In this post, we’ll dive in and look at how we can set up a real-world streaming application. All the steps listed in this post can be done through the Azure portal, but to make it a bit more interesting, we’ll do the entire setup as Infrastructure as Code (IaC) with Azure Resource Manager (ARM) templates.

ARM Templates

ARM templates are very handy and can be used to automate the setup of cloud infrastructure. Templates are written in JSON, extended with expressions and functions that are evaluated at deployment time. An ARM template consists of four main sections: parameters, variables, resources and outputs. The parameters section lets a user define inputs that are supplied at deployment time and used throughout the template. The variables section holds, as the name implies, internal values reused throughout the template, the resources section declares the resources you want to provision, and the outputs section returns values (for example, a resource ID) once the deployment has finished.
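To make the four sections concrete, here is a minimal sketch that builds an ARM template skeleton as a Python dictionary and prints it as JSON. The parameter `eventHubNamespaceName`, the variable `skuName` and the output `namespaceId` are hypothetical illustrations, not the names used by the templates in this post. `$schema` and `contentVersion` are required top-level properties, and the bracketed strings are ARM template expressions evaluated at deployment time.

```python
import json

# Minimal ARM template skeleton showing the four main sections.
# Parameter, variable and output names are hypothetical examples.
template = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    # Inputs supplied by the user at deployment time.
    "parameters": {
        "eventHubNamespaceName": {
            "type": "string",
            "metadata": {"description": "Name of the Event Hubs namespace"},
        }
    },
    # Internal values reused throughout the template.
    "variables": {"skuName": "Standard"},
    # Resources to provision; bracketed strings are ARM expressions.
    "resources": [
        {
            "type": "Microsoft.EventHub/namespaces",
            "apiVersion": "2017-04-01",
            "name": "[parameters('eventHubNamespaceName')]",
            "location": "[resourceGroup().location]",
            "sku": {"name": "[variables('skuName')]", "tier": "[variables('skuName')]"},
        }
    ],
    # Values returned once the deployment has finished.
    "outputs": {
        "namespaceId": {
            "type": "string",
            "value": "[resourceId('Microsoft.EventHub/namespaces', parameters('eventHubNamespaceName'))]",
        }
    },
}

template_json = json.dumps(template, indent=2)
print(template_json)
```

The API version shown is one published version for Event Hubs namespaces; check the ARM reference for the version you want to target before deploying a template like this.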

Building Your Own Burglar Alarm

There are tons of great use cases for Azure Stream Analytics. One that I find especially intriguing is streaming and working with IoT data. To demonstrate how straightforward it is to set up a sample application, I’ve created a simple GitHub repo that streams sensor data from a Raspberry Pi. Using a group of sensors, we can read the current state of a room (e.g. temperature, humidity, sound and light levels) and detect motion using an ultrasonic range meter. Azure Stream Analytics can then analyze the degree of motion and trigger a phone call as soon as motion is detected. Essentially, we’ll be creating our own burglar alarm.
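The exact definition of "degree of motion" lives in the Stream Analytics query. One simple interpretation, assumed here purely for illustration, is the change in the distance reported by the ultrasonic range meter between two consecutive readings: someone walking through the beam makes the distance jump abruptly. The 20 cm threshold is a hypothetical value, not one taken from the repo.

```python
from typing import Iterable, List, Optional

# Hypothetical threshold: a jump of more than 20 cm between two
# consecutive ultrasonic readings is treated as motion.
MOTION_THRESHOLD_CM = 20.0


def degrees_of_motion(distances_cm: Iterable[float]) -> List[float]:
    """Absolute change in distance between consecutive readings."""
    changes: List[float] = []
    previous: Optional[float] = None
    for distance in distances_cm:
        if previous is not None:
            changes.append(abs(distance - previous))
        previous = distance
    return changes


def motion_detected(distances_cm: Iterable[float],
                    threshold_cm: float = MOTION_THRESHOLD_CM) -> bool:
    """True if any change between consecutive readings exceeds the threshold."""
    return any(change > threshold_cm for change in degrees_of_motion(distances_cm))


# An empty room keeps a steady distance to the opposite wall; a person
# walking through the beam makes the reading drop sharply.
print(motion_detected([250.0, 249.5, 250.2]))  # False
print(motion_detected([250.0, 249.5, 120.0]))  # True
```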

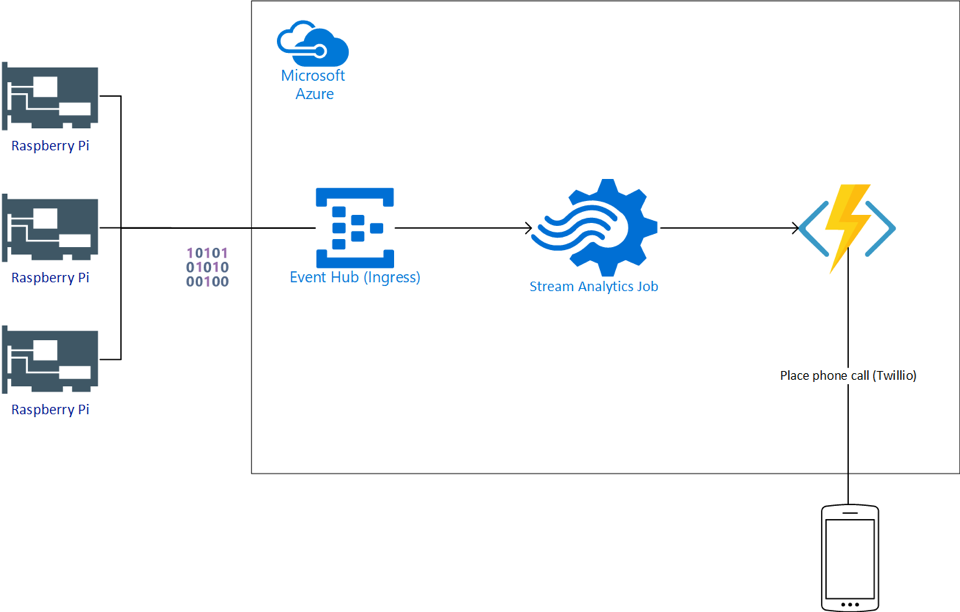

How would something like this look in Azure? The diagram below lays out the setup:

As we can see above, we’ll need a few components:

- Set up an Azure Event Hub (Ingress)

- Set up an Azure Stream Analytics Job (Analytical Engine)

- Set up one or more intelligent outputs (Egress)

- Set up the event producer (Raspberry Pi)

Azure Event Hub (Ingress)

An Azure Event Hub is a fully managed, scalable cloud service that can be used as an event ingress point. You could just as easily use an IoT Hub for this example, which would allow bi-directional communication (e.g. telling the devices to increase or reduce how often they send data).

Any event producer (in this case, a Raspberry Pi) can push its sensor data to the Event Hub as JSON.
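As a sketch of what such an event might look like, the snippet below serializes one reading into a JSON string. The field names (`device_id`, `distance_cm` and so on) are hypothetical; the actual schema is defined by the producer code in the GitHub repo, and whatever names it uses are the ones the Stream Analytics query has to reference.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class SensorReading:
    # Hypothetical schema for one Raspberry Pi reading.
    device_id: str
    timestamp: str       # ISO 8601, UTC
    temperature: float   # degrees Celsius
    humidity: float      # percent
    sound: int           # raw sensor level
    light: int           # raw sensor level
    distance_cm: float   # ultrasonic range meter


def to_event_body(reading: SensorReading) -> str:
    """Serialize a reading into the JSON body sent to the Event Hub."""
    return json.dumps(asdict(reading))


reading = SensorReading(
    device_id="raspberry-pi-01",
    timestamp=datetime.now(timezone.utc).isoformat(),
    temperature=21.5,
    humidity=40.2,
    sound=312,
    light=580,
    distance_cm=249.8,
)
print(to_event_body(reading))
```

With the `azure-eventhub` package, a string like this would typically be wrapped in an `EventData` object and sent in a batch; that step is left out here to keep the sketch dependency-free.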

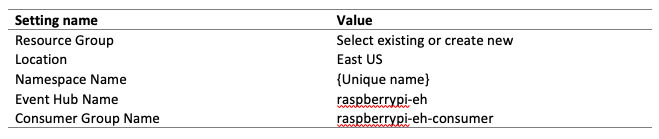

To set up the Event Hub, navigate to the Event Hub ARM template and select Deploy to Azure.

At deployment, provide the required parameter values, agree to the terms and conditions and click Purchase.
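If you would rather redeploy from a script than from the portal, the same values can be kept in an ARM parameters file. The sketch below builds one; the parameter names and values are hypothetical placeholders and must match the names declared in the `parameters` section of the template you deploy.

```python
import json

# Hypothetical parameter names and values; they must match the
# "parameters" section of the template being deployed.
deployment_values = {
    "eventHubNamespaceName": "my-burglar-alarm-ns",
    "eventHubName": "raspberry-pi-eh",
}

# ARM parameters files wrap every value in an object with a "value" key.
parameters_file = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {name: {"value": value} for name, value in deployment_values.items()},
}

parameters_json = json.dumps(parameters_file, indent=2)
print(parameters_json)
```

Saved to a file such as `eventhub.parameters.json`, it can be passed to `az deployment group create` with `--parameters @eventhub.parameters.json` alongside `--template-file`.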

Azure Function (Egress)

So how can we notify a user with a phone call if someone has entered their house? The actual logic to detect motion will reside in the Azure Stream Analytics query, but we can use a serverless Azure Function to call a given phone number through a third-party service such as Twilio.
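When Azure Stream Analytics writes to an Azure Function output, it sends events to the function over HTTP as a JSON array, possibly batching several events into one request. The plain-Python sketch below shows the kind of decision the function has to make: parse the batch and decide whether to place a call. The `motion` field and the `place_call` callback are hypothetical stand-ins; the open-source function used in this post does the real work through Twilio.

```python
import json
from typing import Callable


def handle_stream_analytics_batch(body: str,
                                  place_call: Callable[[str], None],
                                  phone_number: str) -> bool:
    """Parse a batch of events and place at most one call if any event reports motion.

    `place_call` is a hypothetical stand-in for the Twilio call in the real function.
    """
    events = json.loads(body) if body else []
    # Hypothetical output schema: each event carries a boolean "motion" field.
    if any(event.get("motion") for event in events):
        place_call(phone_number)
        return True
    return False


calls = []
batch = '[{"motion": false}, {"motion": true}, {"motion": true}]'
handle_stream_analytics_batch(batch, calls.append, "+15555550100")
print(calls)  # ['+15555550100']
```

Placing at most one call per batch avoids ringing the phone once per event when several motion events arrive together.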

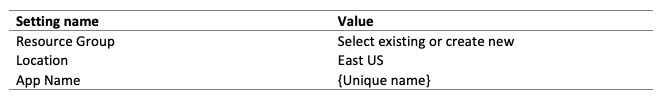

To deploy the infrastructure for the function app, navigate to the Function App ARM template and select Deploy to Azure.

At deployment, provide the required parameter values, agree to the terms and conditions and click Purchase.

The ARM template takes care of the infrastructure (the skeleton). On top of this, we need to add the muscles and fibers. Fortunately, we can reuse an existing Azure Function from an open-source repo that calls a given phone number when triggered. To publish this function to your new function app, follow the steps in our guide. The guide also describes how to set up the free Twilio account needed to place the calls. You only need to add the application settings that relate to the MotionDetectionFunction.
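Inside a function app, application settings are exposed to the function code as environment variables. A minimal sketch of loading and validating the Twilio-related settings might look like this; the setting names are hypothetical, so use the names listed in the guide.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class CallSettings:
    account_sid: str
    auth_token: str
    from_number: str
    to_number: str


# Hypothetical application setting names; the guide defines the real ones.
REQUIRED_SETTINGS = {
    "account_sid": "TWILIO_ACCOUNT_SID",
    "auth_token": "TWILIO_AUTH_TOKEN",
    "from_number": "TWILIO_FROM_NUMBER",
    "to_number": "ALARM_PHONE_NUMBER",
}


def load_call_settings(env: Optional[Mapping[str, str]] = None) -> CallSettings:
    """Read the settings (surfaced as environment variables) and fail fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_SETTINGS.values() if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing application settings: {', '.join(missing)}")
    return CallSettings(**{field: env[name] for field, name in REQUIRED_SETTINGS.items()})


try:
    load_call_settings({})
except RuntimeError as error:
    print(error)
```

Failing at startup with a list of missing settings is easier to debug than a call that silently never happens.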

Azure Stream Analytics (Analytical Engine)

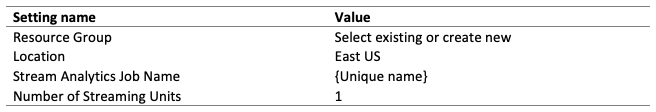

The Azure Stream Analytics job is the analytical engine: it runs the continuous query that performs real-time analytics on the event stream produced by the Raspberry Pi. To deploy the job, navigate to the Azure Stream Analytics ARM template and select Deploy to Azure.

At deployment, provide the required parameter values, agree to the terms and conditions and click Purchase.

Once the deployment has finished, do the following to complete the setup:

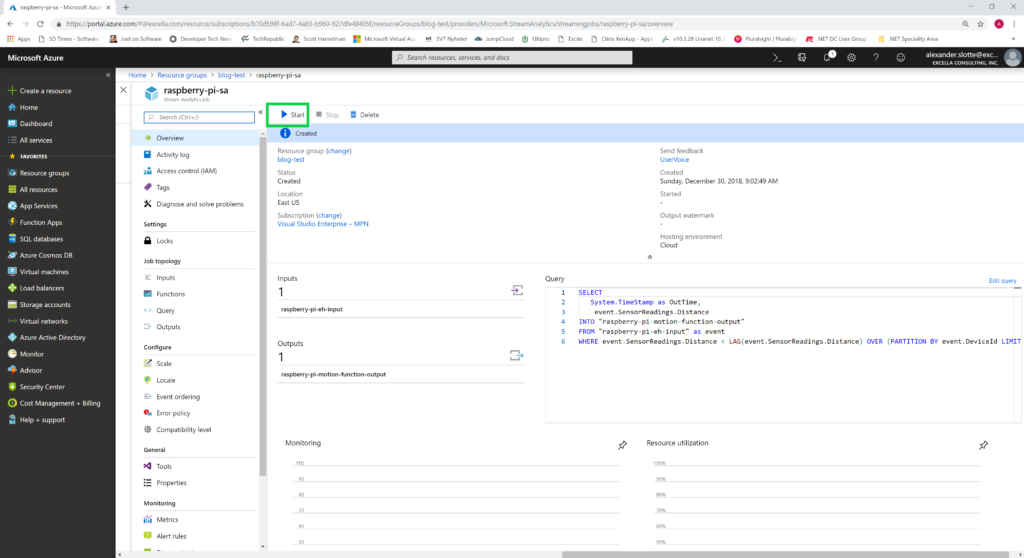

- Copy the following query (line 13-19) into the Azure Stream Analytics job.

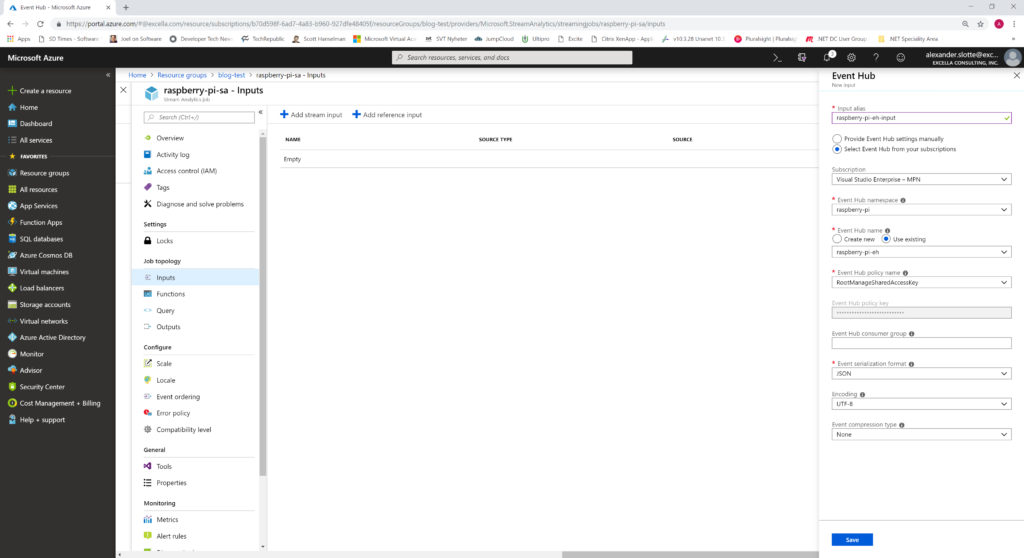

- Add a streaming input named raspberry-pi-eh-input

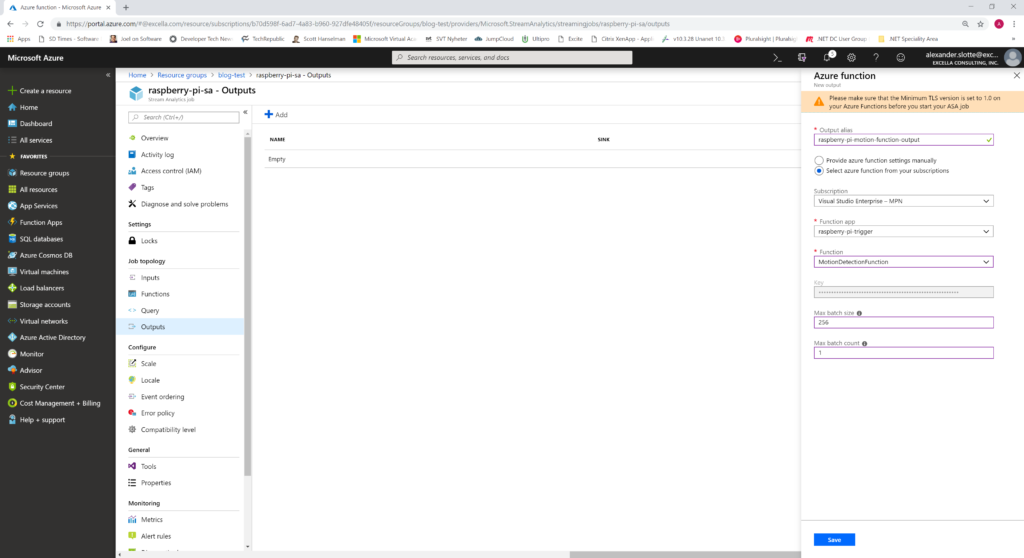

3. Add a streaming output named raspberry-pi-motion-function-output
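Stream Analytics queries are written in a SQL-like language and often aggregate events over time windows, such as a tumbling window (fixed-size, non-overlapping intervals). The Python sketch below does not reproduce the query copied above; it only emulates the windowing idea offline, computing the spread between the largest and smallest distance reading per 5-second tumbling window and flagging windows where that spread exceeds a threshold. The window size, threshold and max-minus-min measure are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

WINDOW_SECONDS = 5          # hypothetical tumbling window size
MOTION_THRESHOLD_CM = 20.0  # hypothetical threshold


def tumbling_windows(events: Iterable[Tuple[float, float]],
                     size: int = WINDOW_SECONDS) -> Dict[int, List[float]]:
    """Group (epoch_seconds, distance_cm) events into fixed, non-overlapping windows keyed by start time."""
    windows: Dict[int, List[float]] = defaultdict(list)
    for timestamp, distance in events:
        window_start = int(timestamp // size) * size
        windows[window_start].append(distance)
    return dict(windows)


def windows_with_motion(events: Iterable[Tuple[float, float]],
                        size: int = WINDOW_SECONDS,
                        threshold: float = MOTION_THRESHOLD_CM) -> List[int]:
    """Return the start times of windows whose distance spread exceeds the threshold."""
    return sorted(
        start
        for start, distances in tumbling_windows(events, size).items()
        if max(distances) - min(distances) > threshold
    )


events = [(0.5, 250.0), (2.0, 249.0), (6.0, 250.0), (7.5, 130.0), (11.0, 248.0)]
print(windows_with_motion(events))  # [5]
```

In an actual Stream Analytics query, the same grouping would be expressed with `GROUP BY TumblingWindow(second, 5)`.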

Raspberry Pi (Event Producer)

Congratulations on making it this far! The cloud infrastructure is now set up, and it’s time to tackle the event producer, which will supply the Stream Analytics job with data to work on. A Raspberry Pi and a GrovePi are required for this step; follow the directions outlined here.

Don’t have a Raspberry Pi or GrovePi at hand but still want to test out your data pipeline? Not a problem: refer to our instructions on how to set up a simulated event stream.
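To get a feel for what a simulated stream involves, the sketch below generates fake readings with a steady background distance and, at a chosen step, a sudden drop that mimics someone walking past the sensor. Everything here (field names, value ranges, the intrusion step) is hypothetical, and printing each JSON line stands in for sending it to the Event Hub.

```python
import json
import random
from typing import Iterator, Optional


def simulated_readings(count: int, intrusion_at: Optional[int] = None,
                       seed: int = 42) -> Iterator[dict]:
    """Yield fake sensor readings; the distance drops sharply at step `intrusion_at`."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    for step in range(count):
        distance = 250.0 + rng.uniform(-1.0, 1.0)  # empty room: wall about 2.5 m away
        if step == intrusion_at:
            distance = 90.0 + rng.uniform(-5.0, 5.0)  # someone in the beam
        yield {
            "device_id": "simulated-pi",
            "step": step,
            "temperature": round(21.0 + rng.uniform(-0.5, 0.5), 2),
            "humidity": round(40.0 + rng.uniform(-2.0, 2.0), 2),
            "distance_cm": round(distance, 1),
        }


for reading in simulated_readings(5, intrusion_at=3):
    print(json.dumps(reading))  # stand-in for sending to the Event Hub
```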

Summary

Our data pipeline is now ready! The final steps are to start your event producer and kick off your Azure Stream Analytics job. If everything works as expected, your phone should ring as soon as you trigger the motion detector on your Raspberry Pi.

The possibilities for these kinds of solutions are endless. Do you want to see your data live in a Power BI dashboard, or store it in Azure Data Lake or Cosmos DB for offline processing? Both are only a few clicks away, since you can keep integrating your pipeline with other fully managed cloud services in Azure!

I hope I have inspired you to continue exploring the world of IoT and Azure Stream Analytics. As the world grows ever more connected, streaming and acting on live data will become increasingly important for a vast number of organizations.