Security is an essential part of any machine learning (ML) model, especially given the inherent risks associated with AI. These risks are top-of-mind concerns for CSOs and CIOs because ML models work with sensitive information that needs to be protected for ethical and legal reasons. This is why preventing attacks and minimizing risk are critical when building an ML model.

Fortunately, there are effective ML model security measures you can incorporate into DataOps and MLOps solutions that can stop attacks before they happen. The following is an overview of the most common ML model security attacks and the solutions that can prevent them.

1. Adversarial Machine Learning Attack

Adversarial machine learning attacks happen when attackers look for small variations in a model’s inputs that push its outputs in an incorrect, attacker-chosen direction. For example, a hacker can trick an image classification neural network into an incorrect prediction by changing only one pixel in the input image, and the network can misidentify the image with a high degree of confidence. In the example below, from a research paper hosted on Cornell University’s arXiv, changing one pixel in the image of a ship made the network 99.7% sure that it was a car.

Adversarial Machine Learning Attack Solutions

A robust DataOps and MLOps solution can introduce intentional noise and randomness into the training data and the model tuning, which significantly reduces the model’s vulnerability to such attacks. Across many ML model attacks, monitoring is the front line: it can identify potential threats and raise alerts by looking for abnormal input patterns and usage.
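As a rough illustration of the noise injection described above, the sketch below adds Gaussian noise to copies of each training row before training. The function name `augment_with_noise`, the `sigma` and `copies` defaults, and the toy rows are all assumptions made for this example, not part of any particular DataOps or MLOps product.

```python
import random

def augment_with_noise(rows, sigma=0.05, copies=2, seed=0):
    """Return the original rows plus `copies` noisy variants of each row.

    rows  : list of (features, label) pairs; features is a list of floats
    sigma : standard deviation of the Gaussian noise (hypothetical default)
    """
    rng = random.Random(seed)
    augmented = list(rows)
    for features, label in rows:
        for _ in range(copies):
            # Perturb every feature slightly; the label stays the same.
            noisy = [x + rng.gauss(0.0, sigma) for x in features]
            augmented.append((noisy, label))
    return augmented

# Toy data: two-feature rows standing in for real training examples.
training_rows = [([0.1, 0.9], "ship"), ([0.8, 0.2], "car")]
augmented_rows = augment_with_noise(training_rows)
```

Training on `augmented_rows` instead of `training_rows` encourages the model to give the same answer for inputs that differ only slightly. How much noise is appropriate depends on the data and would need to be tuned.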

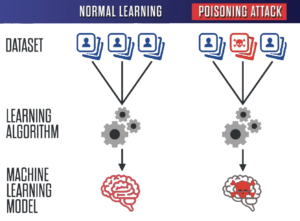

2. Data Poisoning Attack

In a data poisoning attack, the attacker identifies the source of raw data used to train the model, then strives to bias or “poison” the data to compromise the resulting machine learning model’s accuracy. The attacker needs access to raw data, meaning the hacker needs to be on the inside or use other methods to target employees to gain access.

Data Poisoning Attack Solutions

The best defenses against data poisoning are prevention and detection. Monitoring production models for data drift and concept drift can reveal phase shifts, imbalances, or changes in data density and distribution. With consistent monitoring, compromised data can be caught before the model learns from it.
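One way to watch for the distribution shifts mentioned above is the Population Stability Index (PSI), which compares a feature’s distribution at training time with its distribution in production. The sketch below is a minimal version under stated assumptions: the function name, the bin count, and the 0.25 alert threshold (a common rule of thumb) are choices made for this example.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

baseline = [i / 100 for i in range(100)]        # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]   # production values skewed upward
psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
drift_alert = psi_shifted > 0.25  # assumed threshold; tune per feature
```

A check like this would typically run per feature on a schedule, with an alert pausing retraining until someone has reviewed the incoming data.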

3. Online Adversarial Attack

An online adversarial attack targets a model that learns online from a continuous stream of new data: an attacker can manipulate the model’s learning by sending it false data. If the model is learning from “fake news” or new, incorrect data, this can have ethical implications, such as learning from sexist, racist, or other hate speech. The manipulations are effectively irrevocable since they operate on a transient data stream.

Online Adversarial Attack Solutions

A robust data ingestion process in the MLOps solution would identify such concentrations of anomalous data in new streams. Versioning in the MLOps solution, of both data and models, would allow a quick rollback to an earlier, unaffected model version.
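The two ideas above, screening incoming data and rolling back to a known-good version, can be sketched as follows. This is a simplified illustration: `VersionedModelStore`, `screen_batch`, and the z-score limit of 4.0 are hypothetical names and values, and a real ingestion pipeline would use richer anomaly checks than a single z-score.

```python
import statistics

class VersionedModelStore:
    """Keeps every published model snapshot so a bad update can be undone."""

    def __init__(self):
        self._versions = []

    def publish(self, model_state):
        self._versions.append(dict(model_state))  # store a copy
        return len(self._versions) - 1            # version id

    def rollback(self, version_id):
        return dict(self._versions[version_id])


def screen_batch(batch, reference, z_limit=4.0):
    """Split a batch into (accepted, quarantined) values using z-scores
    computed against a trusted reference sample."""
    mean = statistics.fmean(reference)
    stdev = statistics.stdev(reference)
    accepted, quarantined = [], []
    for value in batch:
        z = abs(value - mean) / stdev
        (accepted if z <= z_limit else quarantined).append(value)
    return accepted, quarantined


store = VersionedModelStore()
v0 = store.publish({"weight": 1.0})              # known-good model snapshot
reference = [9.8, 10.1, 10.0, 9.9, 10.2]         # trusted historical values
accepted, quarantined = screen_batch([10.0, 10.3, 55.0], reference)
restored = store.rollback(v0)                    # recover the earlier version
```

Only the `accepted` values would be passed to the online learner; quarantined values are held for review rather than silently dropped.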

“Gartner predicts that ‘through 2022, 30% of all AI cyberattacks will leverage training-data poisoning, AI model theft, or adversarial samples to attack AI-powered systems.’” – Microsoft

4. Distributed Denial of Service Attack (DDoS)

In a DDoS attack, hackers disrupt the model’s use by deliberately flooding it with complicated problems that take the model a significant time to solve, which renders it unusable for its intended purpose. Such attacks are possible when one or more computers are infected with malware that lets the hacker control them.

DDoS Attack Solutions

To combat this attack, take an approach similar to defending web services against a traditional DDoS attack: build a resilient production model service architecture that can stand up to sudden spikes in service requests. What’s unique about machine learning applications is that inference instances often run on infrastructure optimized for machine learning, which can be more costly to scale up, so additional mitigation measures should be in place.
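One possible mitigation measure, offered here as an assumption rather than something the text prescribes, is to rate-limit requests in front of the inference service. The sketch below is a minimal token bucket; the class name, the `cost` parameter, and the injectable `clock` are choices made for this example.

```python
import time

class TokenBucket:
    """Allows short bursts up to `capacity` and a steady `rate` of requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should reject or queue the inference request


# A fake clock keeps the demonstration deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=1.0, capacity=3, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(5)]       # first three pass, the rest are throttled
fake_now[0] += 2.0                               # two seconds later, two tokens are back
after_wait = [bucket.allow() for _ in range(3)]
```

Because deliberately complicated inputs are the concern, a service could charge a higher `cost` for requests it estimates to be expensive, so that a few heavy requests exhaust a client’s budget as quickly as many light ones.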

5. Transfer Learning Attack

In transfer learning, a model is built on top of an architecture that has already been pre-trained on a larger data set, and a majority of models are developed this way. While there are many benefits to this method, there is the potential for a backdoor transfer learning attack. If someone develops an attack against the base model that the production model is built on, it is likely that the attack will work on the production model as well.

Transfer Learning Attack Solutions

Transfer learning significantly lowers the development and adoption costs of cutting-edge machine learning models. However, to protect against transfer learning attacks, transfer learning models should be intentionally retrained against custom datasets. The model architecture should be tuned and the objective functions updated to create enough variation in the production model that it won’t be as vulnerable to attacks tailored for the open-sourced root models.

6. Data Phishing Privacy Attack

A data phishing privacy attack occurs when hackers are able to reverse-engineer the training data set from the model and break confidentiality. The opportunity arises when certain conditions hold, such as:

- the model is trained on confidential data

- the model is overfitted, meaning the model fits too closely to the training data set and is unable to generalize well to new data

- the model is trained on only a small number of records

An example of this type of data set can be found in healthcare, within a hospital or clinic. There could be a lot of data in each patient record, but only a small number of patient records.

Data Phishing Privacy Attack Solutions

Statistical methods applied when validating the data, such as differential privacy or randomized data hold-out, can serve as an additional layer of privacy protection. There are also promising discoveries being made in the field of fully encrypted machine learning, where the user of the ML model submits only encrypted input data and only the encrypted model is exposed to the user. This keeps both the model and the training data private.
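To make differential privacy more concrete, the sketch below applies the Laplace mechanism to a simple counting query. It is a minimal illustration under stated assumptions: the function name `private_count`, the toy patient records, and the choice of `epsilon=0.5` are all hypothetical, and protecting a trained model, rather than a single query, requires more involved techniques.

```python
import random

def private_count(records, predicate, epsilon, rng=random):
    """Count matching records, then add Laplace noise with scale 1/epsilon.

    A counting query has sensitivity 1: adding or removing one record
    changes the true count by at most 1.
    """
    true_count = sum(1 for r in records if predicate(r))
    scale = 1.0 / epsilon
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

# Toy records standing in for a small, sensitive data set.
patients = [
    {"age": 34, "diabetic": True},
    {"age": 61, "diabetic": False},
    {"age": 47, "diabetic": True},
]
rng = random.Random(42)
noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy. The noise makes it hard to tell from the answer whether any single patient’s record was included.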

“Healthcare beats out financial services as most at-risk for cyber attacks. In just one year, there were 100 million cyber breaches in the healthcare sector. Financial services were a close second in vulnerability, followed by the manufacturing, government, and legal sectors.” – fundera

Preventing Attacks on Your Data

Ultimately, ensuring that the data being input into your ML model is not malicious in its volume, frequency, and distribution is paramount to receiving the correct output from the model, to the security of your data, and, frankly, to the security of your organization.

Additionally, while there are many benefits to building new ML models from existing architecture (cost, effort, accuracy), doing so without the proper security measures leaves the newer models vulnerable to machine learning attacks. ML models capable of online or continuous learning are also open to bias and misinformation and will need to be monitored closely.

Understanding and implementing ML model security and AI safety measures make it possible to scale and meet your biggest challenges while minimizing vulnerability and risk.