Client

Department of Defense

Market

Federal

Industry

National Security

Offerings

Artificial Intelligence & Analytics

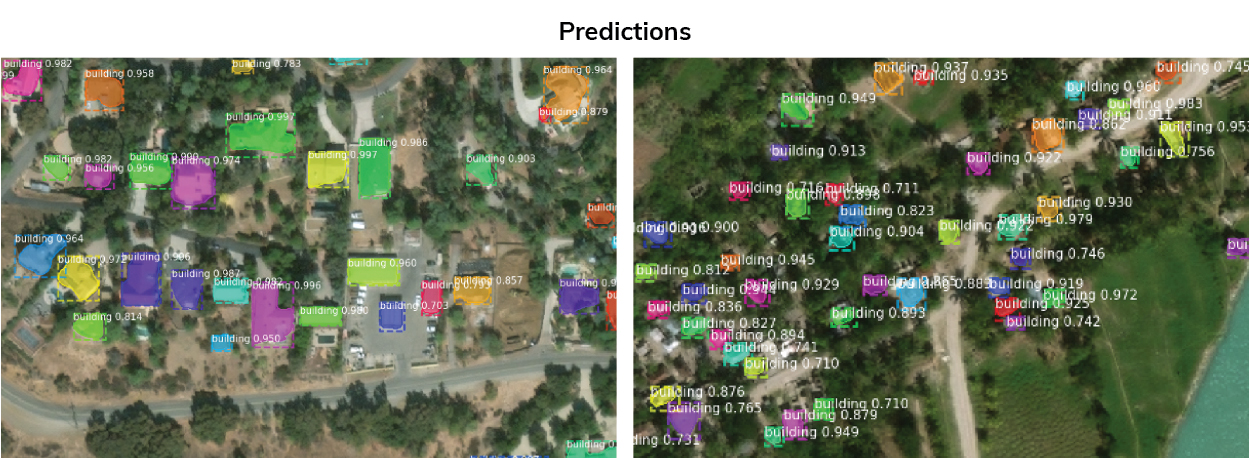

Last fall, Excella participated in the Department of Defense’s (DoD) Eye in the Sky Challenge. The challenge’s goal of developing Machine Learning (ML) and computer vision algorithms to accelerate the analysis of satellite imagery and enhance disaster response was a perfect fit for our vision and mission. At Excella, we use technology to make bold new visions a reality. Eye in the Sky was a great opportunity to showcase our talents while making an impact in the community. Through our participation in the competition, we were able to develop new technologies, demonstrate how they could enhance disaster response, and validate the best practices we’ve been using to strengthen our clients’ Artificial Intelligence (AI) efforts with Agile and DevSecOps techniques.

Conclusion

For our team, Eye in the Sky was a great experience. It was an effective example of how we regularly invest in the skills of our team members and experiment with new techniques that we use with our most sophisticated clients. If one of our small teams can build a sophisticated infrastructure, select and tune complex algorithms, and submit a worthy entry into this high-profile competition in just 10 days, imagine what can happen with a fully committed team on a real-life project. If you want to learn more about how we’re bringing Agile, DevSecOps, and AI expertise to our clients outside of these competitions, read our JAIC Press Release.